Collaborative Learning To Generate Audio-video Jointly

Vinod Kumar Kurmi, Vipul Bajaj, Badri N Patro, Venkatesh K Subramanian, Vinay P. Namboodiri, Preethi Jyothi

Indian Institute of Technology Kanpur, University of Bath, Indian Institute of Technology Bombay

Abstract

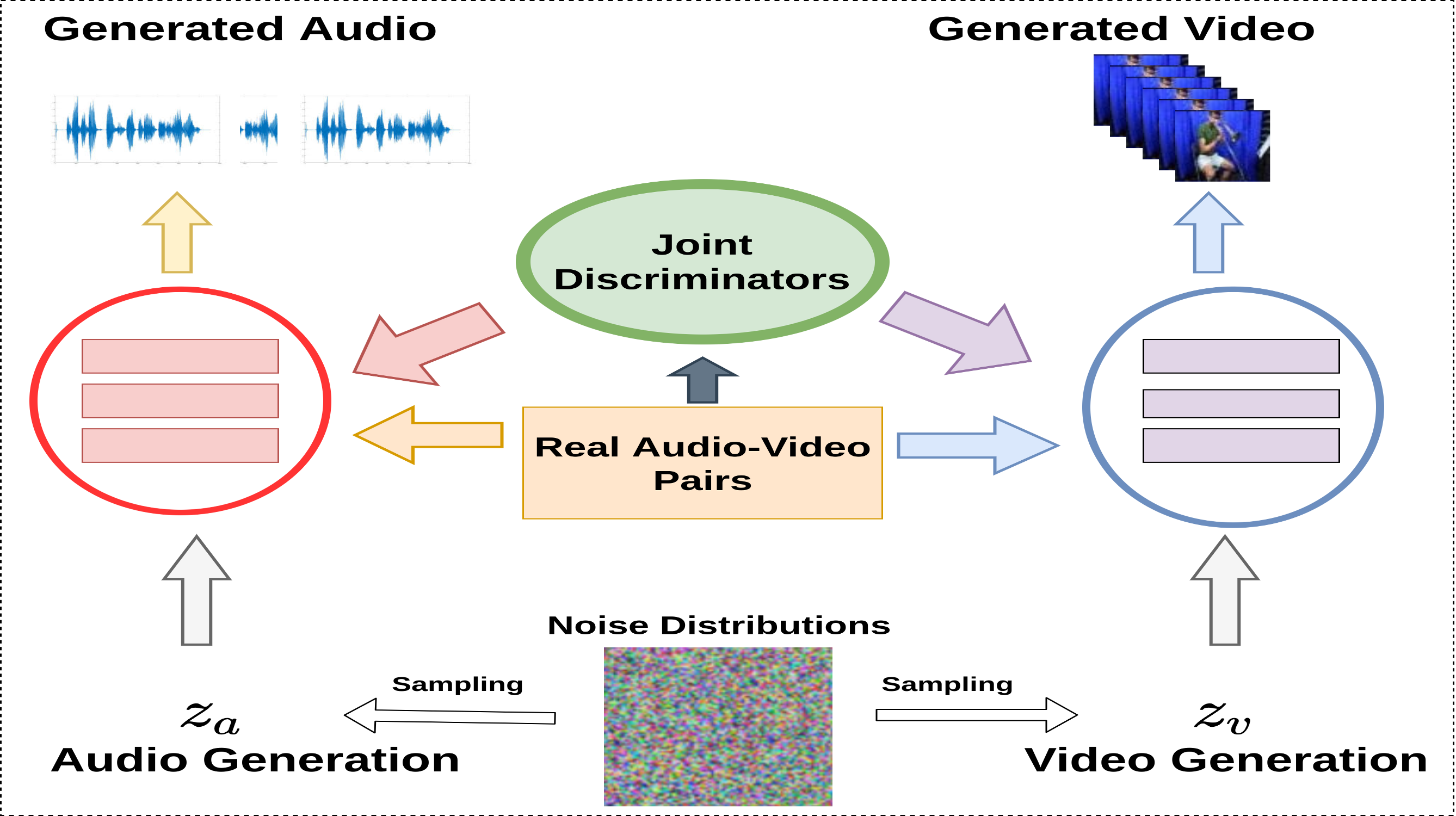

A number of GAN-based techniques have demonstrated the generation of multimedia data one modality at a time, such as images, videos, or audio. However, multimodal generation, specifically the generation of audio and video together, has so far not been well explored. Toward this problem, we propose a method that generates naturalistic video and audio samples by jointly generating the two correlated modalities. The proposed method uses multiple discriminators to ensure that the audio, the video, and the joint audio-video output are each indistinguishable from real-world samples. We also present a dataset for this task and show that the method generates realistic samples. The method is validated using standard metrics such as Inception Score (IS) and Fréchet Inception Distance (FID), as well as through human evaluation.
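The abstract does not state how the discriminator losses are combined. As a rough illustration only, the sketch below assumes a standard non-saturating GAN objective in which the generator's loss is a weighted sum over three discriminators (audio-only, video-only, and joint audio-video), each returning raw logits. The function names, the equal default weights, and the loss form are all assumptions made for illustration; this is not the authors' implementation.

```python
import numpy as np


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)


def discriminator_loss(real_logits, fake_logits):
    # Standard GAN discriminator loss, -log D(real) - log(1 - D(fake)),
    # expressed on raw logits: D = sigmoid(logit).
    return np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits))


def generator_loss(fake_logits):
    # Non-saturating generator loss, -log D(fake), on raw logits.
    return np.mean(softplus(-fake_logits))


def joint_generator_loss(audio_logits, video_logits, joint_logits,
                         weights=(1.0, 1.0, 1.0)):
    # Hypothetical combination: the generator must fool the audio-only,
    # video-only, and joint audio-video discriminators at the same time.
    w_audio, w_video, w_joint = weights
    return (w_audio * generator_loss(audio_logits)
            + w_video * generator_loss(video_logits)
            + w_joint * generator_loss(joint_logits))


if __name__ == "__main__":
    zeros = np.zeros(4)  # every discriminator is maximally uncertain
    print(joint_generator_loss(zeros, zeros, zeros))
```

With all logits at zero, each term equals log 2, so the combined loss is 3 log 2 (about 2.079). Summing the per-modality and joint terms means that a generator producing realistic audio and realistic video that do not match each other would still be penalized by the joint discriminator.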

Code Coming Soon!

V. K. Kurmi, Vipul Bajaj, Badri N Patro, Venkatesh KS, V. P. Namboodiri, Preethi Jyothi. Collaborative Learning To Generate Audio-video Jointly.

BibTeX

@InProceedings{Kurmi_2021_icassp,

author = {Kurmi, Vinod K and Bajaj, Vipul and Patro, Badri and K Subramanian, Venkatesh and Namboodiri, Vinay P. and Jyothi, Preethi},

title = {Collaborative Learning To Generate Audio-video Jointly},

booktitle = {IEEE ICASSP},

month = {June},

year = {2021}

}