Deep Bayesian Network for Visual Question Generation

Badri N. Patro, Vinod Kumar Kurmi, Sandeep Kumar, Vinay P. Namboodiri

Indian Institute of Technology Kanpur

Abstract

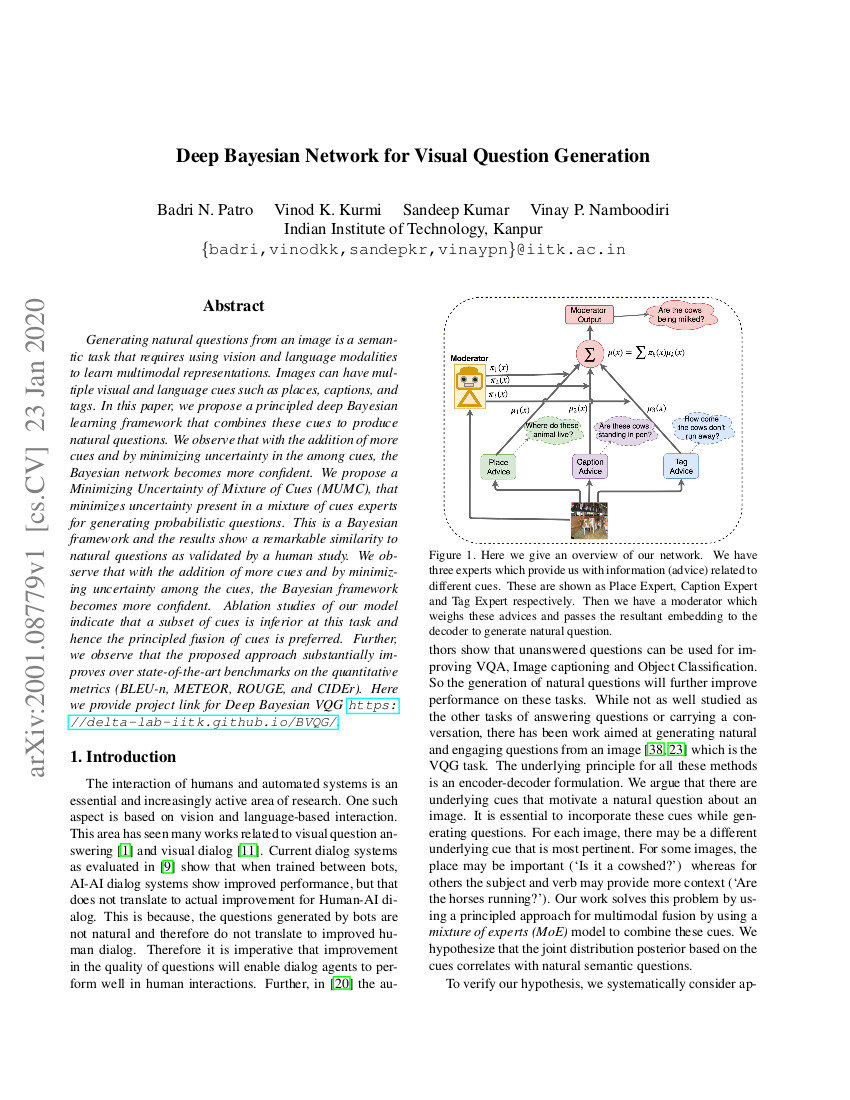

Generating natural questions from an image is a semantic task that requires using vision and language modalities to learn multimodal representations. Images can have multiple visual and language cues such as places, captions, and tags. In this paper, we propose a principled deep Bayesian learning framework that combines these cues to produce natural questions. We observe that with the addition of more cues and by minimizing uncertainty among the cues, the Bayesian network becomes more confident. We propose a Minimizing Uncertainty of Mixture of Cues (MUMC) approach that minimizes the uncertainty present in a mixture of cue experts for generating probabilistic questions. This is a Bayesian framework, and the results show a remarkable similarity to natural questions, as validated by a human study. Ablation studies of our model indicate that using only a subset of the cues is inferior at this task, and hence the principled fusion of cues is preferred. Further, we observe that the proposed approach substantially improves over state-of-the-art methods on the quantitative metrics (BLEU-n, METEOR, ROUGE, and CIDEr). Here we provide the project page for Deep Bayesian VQG.
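The abstract describes fusing several cue "experts" (place, caption, tag) and reducing the uncertainty of their mixture. The code has not been released yet, so the sketch below is not the MUMC implementation. It is a minimal, hypothetical illustration of two standard ingredients. The first is a convex mixture of per-cue output distributions. The second is the entropy-based uncertainty decomposition commonly used with Monte Carlo (MC) dropout samples. Several parts are assumptions for illustration only: the function names, the toy four-word vocabulary, the example distributions, and the inverse-entropy gating rule.

```python
import math
from typing import List, Sequence, Tuple

Dist = List[float]  # a probability distribution over a toy vocabulary (assumption)


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(x * math.log(x) for x in p if x > 0.0)


def mix(experts: Sequence[Dist], weights: Sequence[float]) -> Dist:
    """Convex combination of per-cue expert distributions (weights are normalized)."""
    total = sum(weights)
    norm = [w / total for w in weights]
    return [sum(w * e[k] for w, e in zip(norm, experts)) for k in range(len(experts[0]))]


def inverse_entropy_weights(experts: Sequence[Dist], eps: float = 1e-6) -> List[float]:
    """Hypothetical gating rule: trust confident (low-entropy) cue experts more."""
    return [1.0 / (entropy(e) + eps) for e in experts]


def uncertainty_decomposition(samples: Sequence[Dist]) -> Tuple[float, float, float]:
    """Given T stochastic forward passes (e.g. MC dropout), return
    (predictive entropy, expected entropy, mutual information)."""
    t = len(samples)
    mean = [sum(s[k] for s in samples) / t for k in range(len(samples[0]))]
    predictive = entropy(mean)                          # total uncertainty
    expected = sum(entropy(s) for s in samples) / t     # aleatoric part
    return predictive, expected, predictive - expected  # MI = epistemic part


if __name__ == "__main__":
    place = [0.70, 0.10, 0.10, 0.10]    # hypothetical place-cue expert
    caption = [0.60, 0.20, 0.10, 0.10]  # hypothetical caption-cue expert
    tag = [0.25, 0.25, 0.25, 0.25]      # an uninformative tag-cue expert
    experts = [place, caption, tag]

    uniform = mix(experts, [1, 1, 1])
    gated = mix(experts, inverse_entropy_weights(experts))
    print("uniform mixture entropy:", entropy(uniform))
    print("gated mixture entropy:  ", entropy(gated))
    print("decomposition:", uncertainty_decomposition([place, tag]))
```

With these toy numbers, the inverse-entropy gating down-weights the uninformative tag expert, so the gated mixture has lower entropy than the uniform one. The mutual-information term is zero when all stochastic samples agree and positive when they disagree. That is one common way to read "the network becomes more confident" in a Bayesian setting.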

t-SNE Plots

Visualization

Code Coming Soon!

Badri N. Patro, V. K. Kurmi, Sandeep Kumar, V. P. Namboodiri. Deep Bayesian Network for Visual Question Generation.

BibTex

@InProceedings{Patro_2020_WACV,
  author = {Patro, Badri N. and Kumar Kurmi, Vinod and Kumar, Sandeep and Namboodiri, Vinay P.},
  title = {Deep Bayesian Network for Visual Question Generation},
  booktitle = {WACV},
  year = {2020}
}

Acknowledgement

We acknowledge the help provided by the Delta Lab members, who have supported us in this research.